7 Test Assembly and Deployment

After the previous chapters focused on the creation of individual item projects (i.e. CBA ItemBuilder project files together with one or multiple tasks as the entry points), this chapter is about the use of these item projects for operational test administration and data collection.

7.1 Quick-Start: Assessments using R (and Shiny)

To follow this quick-start tutorial, download and install R and, for instance, RStudio.76 After the installation, open R and install the packages shiny, remotes, knitr and ShinyItemBuilder:

install.packages("shiny")

install.packages("remotes")

install.packages("knitr")

remotes::install_github("kroehne/ShinyItemBuilder", build_vignettes = TRUE)

When all packages are installed, the CBA ItemBuilder projects are required. The project files are expected in the folder provided as argument to the function call getPool(). Additional arguments can be used to define the order of tasks within the item pool (by default, all files and tasks are included, ordered by name).

In the following example, we use the function getDemoPool("demo01") to illustrate the use of the package with example items:

# app.R

library(shiny)

library(ShinyItemBuilder)

# demo items

item_pool <- getDemoPool("demo01")

# your items would be loaded via

# item_pool <- getPool(path="PATH-TO-YOUR-IB-PROJECTS")

assessment_config <- getConfig()

shinyApp(assessmentOutput(pool = item_pool,

config = assessment_config,

overwrite=T),

renderAssessment)

The assessment is started by executing the complete file app.R.

The items can be answered in a browser using the URL either started automatically or displayed by R / Shiny (e.g., http://127.0.0.1:PORT)77. More configurations are possible (see section 7.3). If you have an account for shinyapps.io, the assessment can be published directly from RStudio and used for data collections online (see section 7.3 for details).78

7.2 (Technical) Terminology and Concepts

Finding an appropriate test delivery software requires some technical considerations. Before describing the available tools, this section briefly describes some important technical terms and concepts.

7.2.1 Deployment Mode (Online, Offline, Cached and Mobile)

All assessments created with the CBA ItemBuilder require a web browser or browser component for rendering the generated HTML / JavaScript content. Web browsers are available on various devices, including desktop and mobile computers, cell phones, televisions, and game consoles. CBA ItemBuilder items can be used one way or the other on various platforms with built-in modern web browsers.

Online Deployment: The assessment is conducted in a supported web browser, constantly connected to the internet, and data are stored on a web server. Accordingly, some technology is required for hosting the assessment. The required hosting technology depends on the deployment software, including, for instance, R/Shiny (see sections 7.1 and 7.3) or PHP-based web hosting or cloud technologies (e.g., using TAO, see section 7.4), or any server-side technology (e.g., dotnet core used either directly or containerized for the software described in section 7.5).

Offline Deployment: The same software components are used as for the online deployment (i.e., the assessment is conducted in a web browser, together with a server component), but the server and browser components run on the same device. Accordingly, items and data are stored only locally, and an internet connection is required neither to prepare nor to run the assessment. Data are collected by copying the data from the local devices or by collecting the devices (e.g., thumb drives).

Cached Deployment: The assessment is conducted in a web browser connected to the internet while the assessment is prepared, but no (reliable) network connection is required during the assessment. Hence, data are stored locally, and after the assessment is finished, data are collected either online or by copying the data from local devices.

Mobile Deployment: Mobile deployment is a specific offline or cached assessment conducted on a mobile device. Since mobile devices are used, either a native app or a progressive web app is used (instead of a server), and test execution is possible even if there is no reliable internet connection (i.e., it is a cached deployment on mobile devices). Data can be stored online simultaneously while internet connectivity is available, or transferred later once connectivity is restored.

Mobile deployment is specific in different ways. First, direct file access is typically not possible on mobile devices (instead, data are transferred via the internet). Second, an app can stay accessible after installation, which allows implementing prompts or notifications that are either scheduled offline or triggered via so-called push notifications to attract the test-taker’s attention. Third, mobile devices are often equipped with additional sensors that can contribute to the collected data (and paradata) if incorporated by the app.

7.2.2 Browser Requirements and Availability of (Embedded) Content

Since assessments created with the CBA ItemBuilder are always displayed in a web browser or a browser component79, Cross-Browser Compatibility and Browser Requirements need to be considered.

Browser Requirements: Not all browsers support all features, adhere to the usual standards, or render HTML and interpret JavaScript content in the same way. A typical approach is, therefore, to test the prepared assessment content in different browsers and then, if necessary, restrict the assessment to specific browser versions.

For rendering the CBA ItemBuilder generated content, the React framework is used internally in the current versions.80 React is supported by modern browsers, and so-called polyfills could be added for older browser versions.81 However, the deployment software used to combine CBA ItemBuilder tasks into tests might impose additional requirements that must be met to run assessments successfully. For instance, DIPF/TBA provides tools for online assessment (e.g., IRTlib, see section 7.5) that require a web browser that supports WebAssembly. Using the TaskPlayer API, additional deployment software can be implemented with other server technology and for all browsers or browser components supporting React.

Besides the deployment software, special attention regarding browser support is also required for content that is integrated into CBA ItemBuilder items via ExternalPageFrames (see section 3.14). These extensions, created by JavaScript / HTML programmers, are integrated into items using so-called iframes and must be checked to ensure that they function correctly in all browsers used for an assessment.

Limitations, for example in playing videos, can also be caused by the configuration of the web server (e.g., whether HTTP range requests are supported).

Cross-Browser Compatibility: If the browsers used in online deliveries cannot be controlled and standardized, it is recommended to test the assessments in advance in different browsers. On Windows computers, the display and functioning of CBA ItemBuilder items in locally installed browsers can also be tested by using the regular Preview and copying the preview URL (see section 8.4.1). To check the cross-browser compatibility of CBA ItemBuilder deployments, an online deployment must be prepared first (for instance, using the R/Shiny example described in section 7.1).

A very efficient approach to testing the compatibility of assessments in different browsers is to use third-party vendors that provide web-based access to different browser versions on different devices (cloud web and mobile testing platforms, such as BrowserStack, or similar services).

Availability of (Embedded) Content: Content embedded using ExternalPageFrame must not only work in the browsers that are expected to be used for an assessment. The embedded material must also be available during the assessment. The use of Local resources is required for offline deployment. Moreover, the network connection must be stable and provide enough bandwidth for resources included as External resources (see section 3.14 for the configuration of components of type ExternalPageFrame).

Special attention is required when multiple assessments share the same internet connection. For instance, it must be possible to load large audio and video files fast enough, even if, for example in a class context, all test-takers share one network access. Besides the storage of data (upload of log data, result data and data for recovery, see section 7.2.9), the required bandwidth is driven especially by the download of media files (images, videos, audio files) that have to be transferred from the server to the client. The underlying TaskPlayer API (see section 7.7) provides methods to implement the pre-loading of media files.
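The bandwidth consideration can be made concrete with a rough back-of-the-envelope calculation. The following R sketch estimates how long it takes until all test-takers of a group have loaded one media file over a shared connection. The function name, the file size, the group size, and the link speed are made-up illustration values, and protocol overhead is ignored.

```r
# Rough estimate of the download time for a media file when several
# test-takers share one internet connection (all values are assumptions).
estimate_download_seconds <- function(file_size_mb, n_test_takers, link_mbit_per_s) {
  total_megabits <- file_size_mb * 8 * n_test_takers  # 1 byte = 8 bit
  total_megabits / link_mbit_per_s
}

# Example: 25 test-takers load a 40 MB video over a shared 100 Mbit/s link
estimate_download_seconds(file_size_mb = 40, n_test_takers = 25, link_mbit_per_s = 100)
# 80 (seconds)
```

If an estimate like this exceeds what is acceptable at the start of a task, smaller media files or pre-loading are options to consider.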

7.2.3 Fullscreen-Mode / Kiosk-Mode

Test security (see section 2.10) can translate into different requirements, particularly for high-stakes assessments. To standardize assessments, the assessment content should fill the entire screen if possible. Depending on the deployment method, this goal can only be approximated (although not guaranteed in certain browsers or on mobile devices) using Fullscreen-Mode.

Fullscreen-Mode: In online assessments, a full-screen display of assessment content can be possible in (regular) browsers on computers with desktop operating systems (Windows, Linux, macOS), and Fullscreen-Mode must typically be initiated by a user interaction. However, since test-takers can exit full-screen mode at any time, the assessment software might at least hide the item content when full-screen mode is exited.

Mandatory Fullscreen: For assessments on desktop computers, a requirement can be to ensure that the assessment is only displayed if the browser is in full-screen mode. Since even browser-based assessment software can, to some extent, diagnose that the current view is part of a full-screen presentation, assessment software can make the full-screen presentation mandatory. If a test-taker exits the full-screen presentation, different actions can be considered: Access-related paradata (i.e., a particular log event) can be created, a test administrator can be informed using, for instance, a dashboard (see section 7.2.4), or the assessment content could be faded-out, advising test-takers to return to the full-screen presentation before an assessment can be continued.

Kiosk-Mode: In offline deployments (or if a pre-defined browser is provided for online deployments), a so-called Kiosk-Mode prevents users (i.e., test-takers) from exiting the full-screen presentation of assessment content. Various browsers and add-ons offer Kiosk-Mode for different operating systems. For instance, the offline player provided as part of the IRTlib-software (see section 7.5) provides a Kiosk-Mode for Windows.

A free software solution for establishing test security on computers with Windows, macOS and iOS operating systems, which is used in a variety of application contexts, is the Safe Exam Browser (SEB). Since SEB is a (standard) HTML browser, which has only been supplemented with additional components and configurations for test security, this software can be combined in various ways with the CBA ItemBuilder deliveries.

7.2.5 Input and Pointing Device

Special considerations are required regarding the input devices used for point-and-click and text responses.

Touchscreen vs. Mouse-Click: Assessments on touchscreens (i.e., using touch-sensitive displays) require additional consideration for at least two reasons. First, it is inherent in touch operations that the place where the touch gesture is performed (using either a finger or a specific stylus) is not visible when the finger covers parts of the screen. Hence, if a small area needs to be clicked precisely, this property must be considered when designing the interactive item. Second, web browsers might treat touch events differently from click events. In particular, for content embedded as ExternalPageFrame, it is necessary to ensure that touch and click events are treated by the JavaScript / HTML5 content in all browsers as expected.

Touch devices might also, by default, use additional gestures, including pinch zoom gestures and, for Windows operating systems, the so-called charms bar (activated using a touch gesture over the edge of the screen).

Touchpad vs. Mouse-Click: If notebooks with touchpads are used, an external mouse might be considered for standardization purposes (i.e., to make sure that test-takers can answer the assessment regardless of their familiarity with touchpads), and touchpads might be deactivated to avoid distraction when typing and clicking are required.

(Onscreen) Keyboard and Text Entry: Text and numeric entries in assessments are usually recorded using a built-in or connected keyboard. Text input might automatically trigger an on-screen keyboard for devices with a touch screen. The operating system automatically displays the on-screen keyboard and then reduces the space available on the screen for the item content. In addition, the on-screen keyboard might allow the test-taker to exit Kiosk-Mode.

Careful usability testing (and if a Kiosk-Mode is planned, robustness checks regarding test security) are necessary, particularly on touch devices.

7.2.6 Authentication / Account Management

Regardless of the specific software used for test delivery, the following terms are relevant to web-based assessments.

Session: An essential concept for online assessments is the so-called session. Even if data collection takes place entirely anonymously (i.e., without access restrictions), all data belonging to one person should ideally result in one data record. Accordingly, the session is the unit that bundles the data that can be jointly assigned to one (potential) test-taker. With the help of client-side storage (i.e., session storage, cookies, or local storage), the ID of a session, which is usually created randomly, can be stored so that the data for an assessment, if interrupted and continued by the same test-taker, can be matched. Session IDs can be stored within a particular browser on a computer if the client-side storage is activated and the test-taker agrees to store client-side information.
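The role of the session identifier can be illustrated with a minimal R sketch. It is not taken from any delivery software: the helpers new_session_id() and add_record() and the in-memory store are hypothetical, and sample() is not a cryptographically secure source of randomness.

```r
# Create a random session identifier (hypothetical helper, base R only).
new_session_id <- function(n_chars = 16) {
  paste(sample(c(letters, LETTERS, 0:9), n_chars, replace = TRUE), collapse = "")
}

# In-memory store that bundles all data belonging to one session.
session_store <- new.env()

# Append one record (e.g., a response) to the data of a session.
add_record <- function(session_id, record) {
  existing <- if (exists(session_id, envir = session_store, inherits = FALSE)) {
    get(session_id, envir = session_store)
  } else {
    list()
  }
  assign(session_id, c(existing, list(record)), envir = session_store)
  invisible(session_id)
}

id <- new_session_id()
add_record(id, list(task = "Task01", response = "A"))
# After an interruption, the same id (restored from client-side storage)
# allows further data to be matched to the same session.
add_record(id, list(task = "Task02", response = "C"))
length(get(id, envir = session_store))  # number of records for this session
```

A real deployment would persist the store on the server and keep only the identifier on the client.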

Log-in/Password vs. Token: Authentication for online assessments can use Log-in and Password (i.e., two separate components) or a one-time Token (i.e., only one component). Often, there is no reason to arbitrarily separate log-in and password if the identifier is created as pseudonym only for a particular data collection. A distinction can be made with regard to the question of whether the assessment platform checks the validity of the identifier (access restriction) or if the identifier is only stored.

Protection of Multiple Tabs/Sessions: Finally, when a particular authentication is implemented, it must be considered that the log-in can potentially occur multiple times and in parallel. The delivery software might ensure that an assessment with one access (e.g., log-in/password or token) cannot be simultaneously answered and accessed more than once.
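One conceivable way to prevent parallel use of the same access is a server-side registry of active tokens. The following R sketch only illustrates the idea; the function names are hypothetical, the registry lives in memory, and a production system would additionally need timeouts and persistent storage.

```r
# Registry of tokens that currently have an active session (in-memory sketch).
active_tokens <- new.env()

# Try to start a session; returns TRUE if access is granted and FALSE if
# the token is already in use in another tab or browser.
try_start_session <- function(token) {
  if (exists(token, envir = active_tokens, inherits = FALSE)) {
    return(FALSE)
  }
  assign(token, Sys.time(), envir = active_tokens)
  TRUE
}

# Release the token when the session ends (or times out).
end_session <- function(token) {
  if (exists(token, envir = active_tokens, inherits = FALSE)) {
    rm(list = token, envir = active_tokens)
  }
  invisible(NULL)
}

try_start_session("abc123")  # first access is granted
try_start_session("abc123")  # parallel access with the same token is refused
end_session("abc123")
try_start_session("abc123")  # access is possible again
```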

7.2.7 Task-Flow and Test Assembly

As described in section 2.7, items (i.e., Tasks in the case of CBA ItemBuilder assessments) can be combined in different ways into assessments. Using the CBA ItemBuilder, the assembly of individual assessment components into tests is part of the test deployment software. An assessment component is any component that can be meaningfully used as a component, including a log-in page, cover pages, instruction pages, the actual items or units, and exit or closing pages shown to test-takers.

Linear Sequence: The most common arrangement of assessment components is a Linear Sequence. CBA ItemBuilder Tasks can create a sequence that might contain items, prompts, feedback pages and dialog pages. As long as the same sequence is used for all test-takers, any deployment software that supports linear sequences can be used.

Booklets: If different sequences or combinations of CBA ItemBuilder Tasks are used (of which each target person works on exactly one), the procedure corresponds to so-called Booklets (or test rotations). Depending on the study design, the assignment of a test taker to a particular booklet or rotation is either done in advance (usually assigned to log-ins or tokens, see 7.2.6), or the assignment is done on-the-fly when a session is started.
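Both variants of booklet assignment can be sketched in a few lines of R. The booklet definitions, task names, and tokens below are invented for illustration and do not correspond to a particular deployment software.

```r
# Three hypothetical booklets, each a sequence of task names.
booklets <- list(
  A = c("Task01", "Task02", "Task03"),
  B = c("Task02", "Task03", "Task01"),
  C = c("Task03", "Task01", "Task02")
)

# Variant 1: assignment in advance, balanced across a list of tokens.
tokens <- sprintf("token%03d", 1:6)
assignment <- setNames(rep(names(booklets), length.out = length(tokens)), tokens)

# Variant 2: random assignment on the fly when a session is started.
assign_on_the_fly <- function() sample(names(booklets), 1)

# Task sequence for a given token
booklets[[assignment[["token002"]]]]
```

Assigning in advance makes it easy to balance booklets across the sample, whereas on-the-fly assignment also works when the number of test-takers is unknown.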

Skip Rules: The deployment software for CBA ItemBuilder assessments is also responsible for omitting or skipping Tasks depending on previous responses. This functionality is only required in the test deployment software if the skip rule cannot be implemented within CBA ItemBuilder Tasks (using, for instance, multiple pages and either conditional links or the CBA ItemBuilder task’s finite-state machine).
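A skip rule at the level of the deployment software can be thought of as a function that maps the current position and the responses collected so far to the next task. The R sketch below uses hypothetical task names and a hypothetical function signature; it returns -1 when the sequence is finished, and it is not the interface of any specific delivery tool.

```r
# Linear sequence with one skip rule (all names are hypothetical).
tasks <- c("Intro", "Screening", "FollowUp", "Closing")

# Return the index of the next task, or -1 when the sequence is finished.
# 'responses' is a named list of the responses collected so far.
next_task <- function(current, responses) {
  nxt <- current + 1
  # Skip rule: omit "FollowUp" if the screening question was answered "no".
  if (nxt <= length(tasks) && tasks[nxt] == "FollowUp" &&
      identical(responses[["Screening"]], "no")) {
    nxt <- nxt + 1
  }
  if (nxt > length(tasks)) -1 else nxt
}

next_task(2, list(Screening = "no"))   # skips "FollowUp"
next_task(2, list(Screening = "yes"))  # presents "FollowUp"
```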

Multi-Stage Tests and Adaptive Testing: The more complex and elaborate the calculations become, which are required for the selection of questions (or pages in a CBA ItemBuilder task), and the more items in total are available, the more complicated the implementation within a (single) CBA ItemBuilder task becomes. Accordingly, special delivery software is required to implement complex multi-stage tests and item- or unit-based, uni- or multidimensional adaptive tests using CBA ItemBuilder items (see section 7.5).
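To give an impression of the calculations involved, the following R sketch implements one common selection rule for item-based adaptive testing: choosing the not yet administered item with maximum information under the Rasch model. This is a generic textbook criterion, not the algorithm of a specific delivery software; the item difficulties and function names are assumptions made for the example.

```r
# Item information under the Rasch model for ability theta and difficulty b.
rasch_information <- function(theta, b) {
  p <- plogis(theta - b)  # probability of a correct response
  p * (1 - p)
}

# Select the not yet administered item with maximum information.
# Returns the index of the next item, or -1 if no item is left.
select_next_item <- function(theta, difficulties, administered) {
  candidates <- setdiff(seq_along(difficulties), administered)
  if (length(candidates) == 0) return(-1)
  info <- rasch_information(theta, difficulties[candidates])
  candidates[which.max(info)]
}

difficulties <- c(-1.5, -0.5, 0.0, 0.8, 1.6)  # hypothetical item pool
select_next_item(theta = 0.6, difficulties, administered = c(3))
# 4 (the item with difficulty 0.8 is closest to theta = 0.6)
```

In an operational adaptive test, the ability estimate theta would be updated after each response before the next item is selected.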

7.2.8 Time Limits across Tasks

Analogous to a sequencing of assessment content across individual parts (such as Tasks in the case of CBA ItemBuilder created assessments), the time restriction across tasks can be provided by a deployment software.

Time Measurement: The requirement of a time limit across tasks typically exists only for the actual items of a (cognitive) test, while before and possibly after the time-restricted part further content, for instance, instruction pages and a farewell page, is administered. Moreover, if a time limit across tasks is used, one or multiple additional pages are often shown when the timeout has occurred.

Remaining Time: When the minimal time to solve a particular item is known or can be approximated, the deployment software might apply the time limit already at a Task-switch (i.e., when in CBA ItemBuilder based assessments a new task is requested based on a NEXT_TASK-command, see section 3.12.2), if the remaining time (i.e., the time before the time limit will occur) is below the expected minimal time that would be required to work on the (requested) next task.
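The decision at a task switch reduces to a simple comparison, sketched here in R. The function name and the example values (in seconds) are assumptions for illustration.

```r
# Decide at a task switch whether the next task should still be presented.
# All times are in seconds; min_time_next_task is an assumed, task-specific
# lower bound for meaningfully working on the next task.
present_next_task <- function(time_limit, elapsed, min_time_next_task) {
  remaining <- time_limit - elapsed
  remaining >= min_time_next_task
}

present_next_task(time_limit = 1800, elapsed = 1500, min_time_next_task = 120)  # TRUE
present_next_task(time_limit = 1800, elapsed = 1750, min_time_next_task = 120)  # FALSE
```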

Result-Data (Post-)Processing and Timeouts: Time limits not only affect the presentation of items, meaning that within a particular section of the assessment no more items (i.e., Tasks within CBA ItemBuilder project files) are presented once a timeout has occurred. Time limits also affect the (missing) coding of the responses in the current task and in all subsequent tasks that are not presented because of a timeout.

First, it is important to note that the deployment software requests the ItemScore (see section 7.7) for the current item (i.e., Task within a CBA ItemBuilder project file) when a timeout occurs. Second, within this task, variables (i.e., classes) can be coded as Not Reached (NR), if the corresponding questions were not yet presented in a Task with multiple pages. Hence, the differentiation between Not Reached (NR) and Omitted Responses (OR) for Tasks with multiple parts must be included in the (regular) CBA ItemBuilder scoring (see section 5.3.11).

For assessment components not presented because of a timeout (or because an interviewer aborted the test, see section 7.2.4), no ItemScore will be available, because it is computed only when a Task is exited. Hence, the deployment software is required to determine the remaining tasks that would have been presented without the interruption and assign the appropriate missing code, either Not Reached (NR) in case of a timeout or Missing due to Abortion if an interviewer aborted the assessment.
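The post-processing step described above can be sketched in R as follows. The function and its arguments are hypothetical, and the labels used for the missing codes simply repeat the terms from the text; actual deployment software may represent them differently.

```r
# Assign missing codes to tasks that were not presented (sketch).
# 'planned' is the task sequence that would have been administered,
# 'completed' holds the tasks for which an ItemScore was collected.
code_unpresented_tasks <- function(planned, completed, reason = c("timeout", "abort")) {
  reason <- match.arg(reason)
  code <- if (reason == "timeout") "NR" else "Missing due to Abortion"
  status <- ifelse(planned %in% completed, "scored", code)
  data.frame(task = planned, status = status, stringsAsFactors = FALSE)
}

planned <- c("Task01", "Task02", "Task03", "Task04")
code_unpresented_tasks(planned, completed = c("Task01", "Task02"), reason = "timeout")
```

In this example, Task03 and Task04 receive the code NR, while the first two tasks keep their regular ItemScore.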

7.2.9 Data Storage and Test-Resume

Regardless of the deployment software, there are some data types that arise in all CBA ItemBuilder-based data collections. These are briefly described in this section.

ItemScore (Result Data): When exiting CBA ItemBuilder items by a task-related command (see section 3.12.1) or by an external navigation request of the delivery environment (timeout or test abort), the defined scoring rules (see section 5.3) are evaluated. In the current version of the CBA ItemBuilder (i.e., for the React generator), the ItemScores are provided by the runtime as JSON data and collected by the delivery platform.

Traces (Log Data): In addition to the ItemScore data, trace data are provided automatically by the CBA ItemBuilder runtime. The data are provided as individual log events and can be collected by the deployment software or even be analyzed already instantly during the assessment.

Snapshot: In addition to the two types of data collected for further empirical analyses, snapshots of all internal states that represent the tasks are provided by the runtime so that even in case of interruptions, the possibly complex CBA ItemBuilder tasks can be continued at the last processing state.

Assessment content that is embedded into CBA ItemBuilder items using ExternalPageFrames can use the Snapshot of the CBA ItemBuilder Runtime to implement persistence of content across page changes (see section 4.6.5).

7.3 Using CBA ItemBuilder Items with R (Shiny Package)

ShinyItemBuilder allows using CBA ItemBuilder items in web-based applications created with R/Shiny. This allows local administration of tests (from RStudio) and online administration using, for instance, www.shinyapps.io (or a self-hosted ShinyProxy).

The use of R/Shiny for assessments is advantageous for two main reasons: It combines the data collection seamlessly with the (psychometric) use of gathered response data and collected log events. Moreover, since an easy-to-use infrastructure for Shiny applications exists, it enables a swift approach to run online assessments without setting up a dedicated hosting environment. Although this hosting might be less performant than hosting using more standard web technologies, item authors can use R functions to customize the test assembly (e.g., for multi-stage and adaptive testing).

Concerning the different modes of test deployment (see section 7.2.1), R/Shiny can be used for stand-alone deployment either locally or online.

Local: The assessment is started directly from R locally, and test-takers answer items in a browser on the same computer.82

Online: The assessment is hosted using R / Shiny on a server (e.g., using www.shinyapps.io), and test-takers answer the items in a browser, either on a desktop device or even on a mobile device.

Note that even if an online deployment should be created using the R package ShinyItemBuilder, the preparation is done locally in R. After completing the preparation, the Shiny app is deployed to the online server.

7.3.1 Use of CBA ItemBuilder Project Files in ShinyItemBuilder

The R/Shiny package ShinyItemBuilder needs to know which items should be administered. This can be defined by providing an item pool, created from a folder with CBA ItemBuilder project files or a list of CBA ItemBuilder project files and optional tasks.

item_pool <- getPool(path="PATH-TO-YOUR-IB-PROJECTS")

If no additional function for navigation is defined (see section 7.3.3), the project/tasks defined in the item pool will be administered as a linear sequence. Hence, you can either change the order of project files / tasks in the object item_pool after the call of getPool(), or provide a specific function navigation (see section 7.3.3).

The configuration is done using a list of attributes and functions, created with the function getConfig().

assessment_config <- getConfig()

Various options can be defined, including the visual orientation and zoom of items, as described in the help:

?ShinyItemBuilder::getConfig

7.3.2 Start and End of Assessments using ShinyItemBuilder

In the default configuration, the R/Shiny package ShinyItemBuilder creates a new identifier when the assessment is loaded for the first time from a particular browser. The identifier is stored in the session storage of the browser, so that the same identifier will be used if the test-taker closes and re-opens the page (or reloads the page). Using this identifier, the test-taker will always return to the last visited item. If the last item was ended, an empty page will be presented and the function end defined in the configuration will be called.

The function end can be defined in the configuration to implement different authentication workflows.

Multiple Runs: A new identifier is created automatically when the URL is visited for the first time (or if the application is started in a local deployment). The identifier is stored either in the session storage (default or sessiontype="sessionstorage"), in the local storage (sessiontype="localstorage") or using cookies (sessiontype="cookie"). As long as the identifier is stored, the started session will be continued.83 After the last item, the function end is called, which is defined in the object assessment_config created using the function getConfig():

assessment_config$end=function(session){

showModal(modalDialog(

title = "You Answered all Items",

"Please close the browser / tab.",

footer = tagList(actionButton("endActionButtonOK", "Restart"))))

}

This function is called when the last item was shown (i.e., if the function navigation returns -1). The Shiny actionButton("endActionButtonOK", "Restart") allows test-takers to re-start the assessment.

Single Runs: If the function end does not include the action button (i.e., if the footer is an empty tag-list: footer = tagList()), the test-taker will not be able to start the assessment again once the last item is reached. Alternatively, the end function can also be overwritten to re-direct to another URL:

assessment_config$end=function(session){

session$sendCustomMessage("shinyassess_redirect",

"https://URL-TO-REDIRECT.SOMEWERE/?QUERYSTRING")

}

Authentication: If the assessment is configured with sessiontype="provided", the object assessment_config created with the function getConfig() can contain a custom login function:

assessment_config$login=function(session){

showModal(modalDialog(

tags$h2('Please Enter a Valid Token and Press "OK".'),

textInput('queryStringParameter', ''),

footer=tagList(

actionButton('submitLoginOK', 'OK')

)

))

}

The dialog created by this function is shown if the assessment is not started with a query string that includes a parameter with the name defined in the config assessment_config$queryStringParameterName (default is token). If the parameter is provided, the function assessment_config$validate is called to verify that the token is valid.

7.3.4 Score Responses in R

CBA ItemBuilder tasks provide a scoring (see chapter 5) that can be evaluated and reused in R.

Retrieve ItemBuilder Scoring: The R package provides a template for the score-function, that can be used to extract information from the CBA ItemBuilder provided ItemScore (see section 7.2.9). Adaptation of this function is necessary if selected responses of the already administered tasks are to be used for test sequencing (e.g., if ShinyItemBuilder is used for adaptive testing). The score-function is called automatically, after the administration of one item is completed.

7.3.5 Feedback in R using Markdown/knitr

As discussed in section 2.9.2, a technical platform for report generation is necessary to provide instant feedback after an assessment is completed. In the ecosystem of R/Shiny, the knitr package provides an easy-to-use approach to create dynamically generated documents.

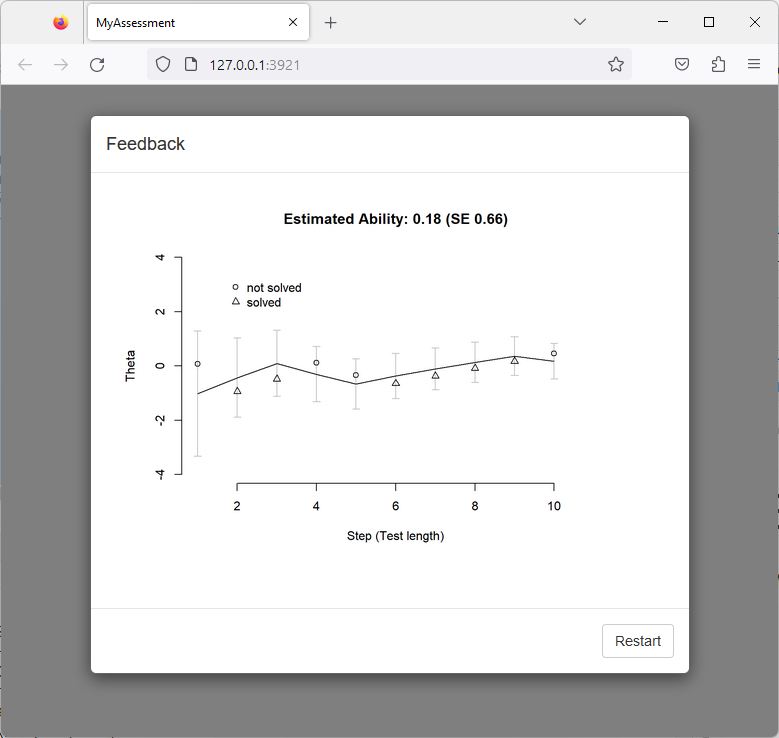

The example in vignette("feedback"), based on a selection of items provided as demo01, illustrates how instant feedback can be created using markdown and knitr. Figure 7.1 provides another example.

7.3.6 Data Storage and Data Access

The package ShinyItemBuilder illustrates how CBA ItemBuilder items can be used with R/Shiny, ready to use for small-scale studies that can be hosted, for instance, on shinyapps.io. Data are stored in R in a global variable runtime.data, persisted in a folder configured using the argument Datafolder (default value is _mydata):

assessment_config <- getConfig(Datafolder="folderName")

Data are stored for each session identifier in a *.RDS file that can be loaded in R using the function readRDS(). By default, data are only stored in the current instance (i.e., data will be lost if the application is put into a Sleeping state or the instance is deleted or newly created on shinyapps.io).84
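Since one file is written per session identifier, the files of a data folder can be combined with a few lines of base R. The sketch below is self-contained and therefore creates two made-up session files in a temporary folder; the helper read_session_files() is hypothetical, and the content of real files written by ShinyItemBuilder will differ from the dummy lists used here.

```r
# Combine the *.RDS files of a data folder into one named list (sketch).
read_session_files <- function(folder) {
  files <- list.files(folder, pattern = "\\.RDS$", full.names = TRUE,
                      ignore.case = TRUE)
  data <- lapply(files, readRDS)
  names(data) <- tools::file_path_sans_ext(basename(files))
  data
}

# Self-contained demonstration with two made-up session files.
demo_folder <- file.path(tempdir(), "_mydata_demo")
dir.create(demo_folder, showWarnings = FALSE)
saveRDS(list(id = "s1", responses = c(1, 0)), file.path(demo_folder, "s1.RDS"))
saveRDS(list(id = "s2", responses = c(1, 1)), file.path(demo_folder, "s2.RDS"))

all_sessions <- read_session_files(demo_folder)
names(all_sessions)
```

For a real study, the folder passed to read_session_files() would be the one configured via Datafolder.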

To access data online, the package illustrates what a simple Maintenance interface could look like. If a maintenance password is provided, the keyboard shortcut Ctrl + X (configured as argument to the function getConfig(maintenanceKey=list(key="x", ctrl=T, shift=F, alt=F), ...)) or the argument ?maintenance in the query string (configured as argument to the function getConfig(maintenanceQuery = "maintenance", ...)) opens a Shiny dialog page (see vignette("maintenance")).

See also vignette("datastorage").

7.3.7 Side Note: Interactively Inspect Log Events of CBA ItemBuilder Tasks

The R package ShinyItemBuilder can also be used to directly inspect the log events collected by a CBA ItemBuilder task live in RStudio.

This is illustrated with the following example:

item_pool <- getDemoPool("demo02")

assessment_config <- getConfig(Verbose = T)

shinyApp(assessmentOutput(pool = item_pool,

config = assessment_config,

overwrite=T),

renderAssessment)

Note the argument Verbose = T that is provided to the function getConfig. In verbose mode, ShinyItemBuilder will print detailed information to the R output window, while the items can be interacted with in a web browser using Shiny.

7.4 Using CBA ItemBuilder Items with TAO (using Portable Custom Interactions)

Tests in QTI-compatible platforms such as TAO are defined as a sequence of QTI items. Each QTI item contains one or more QTI interactions. QTI interactions can be Common Interactions, Inline Interactions, Graphical Interactions, or (Portable) Custom Interactions (PCI). If TAO is used as the assessment platform, then QTI items can be created, managed, and edited directly in TAO. For use cases where assessment content cannot be implemented with QTI interactions, (Portable) Custom Interactions (PCI) can be integrated.85

7.4.1 Prepare CBA ItemBuilder Project Files for fastib2pci-Converter

For item authors without experience in software development or if existing assessment content is already available, the CBA ItemBuilder can be used as authoring tool for Portable Custom Interactions (PCI), for instance, to create Technology-Enhanced Items (see section 2.3) that can be used in TAO. Portable Custom Interactions that can be used in QTI-items can be created using either a single or a linear sequence of multiple CBA ItemBuilder Project Files.

To create a Portable Custom Interaction with CBA ItemBuilder Project Files, the fastib2pci converter can be used. It allows creating Portable Custom Interactions with either single or multiple Project Files. As a necessary prerequisite, one or multiple Tasks must be defined within each Project File. If multiple Project Files are used, an alphabetical order is used. The same applies to the order of Tasks if multiple Tasks are defined within one CBA ItemBuilder Project File.

7.4.2 Generating PCI-Components using fastib2pci-Converter

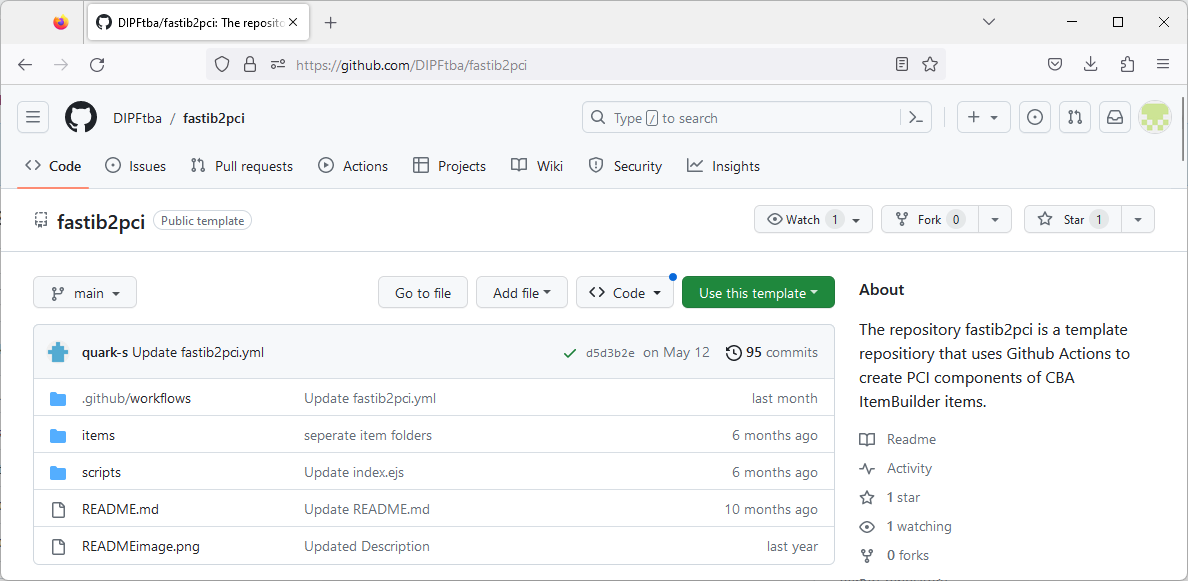

The converter fastib2pci is provided as a GitHub project template that contains a so-called CI/CD worker (i.e., GitHub Actions). Using the converter requires an account at github.com. After creating an account and logging in to your profile, navigate to the repository fastib2pci and push the button ‘Use this template’ as shown in Figure 7.2.

FIGURE 7.2: Repository fastib2pci shows the button Use this template after log-in to github.com.

Provide a new project name and make sure to select Include all branches.86 As soon as the project is created, the CI/CD worker included in the template repository fastib2pci will create the PCI components using the example CBA ItemBuilder Project Files included in the folder items. After the worker has completed, the PCI components can be downloaded from the repository in the section Releases (or using the following link: https://github.com/{your github account name}/{repository name}/releases).

To use the converter with your own CBA ItemBuilder Project Files, create a new directory (e.g., component_1), upload CBA ItemBuilder Project Files to the new directory, and delete the example directories ‘test_1’ and ‘test_2’. After committing the files, the CI/CD worker will automatically update the PCI components and provide them in the section Releases. Note that you can use a git-client (see section 8.3.2.2) to work with the repository.

7.4.3 Flavors of PCI-Components Created by fastib2pci-Converter

For each subfolder of the directory items/, one *.Tar-file (tarball archive)87 is generated. Accordingly, if you plan to use multiple PCI-components for a particular assessment, only one git-repository is required.

The *.Tar-files generated by the fastib2pci-converter contain different versions of the PCI-components, all based on the identical CBA ItemBuilder Project Files in the repository's items/ folder. There are two reasons to create different flavors:

IMS PCI vs. TAO PCI: The implementation of PCI support in TAO changed over the last years from a TAO-specific implementation (TAO PCI) towards the standard specification (IMS PCI). Both versions are generated by the fastib2pci-converter.

Generic vs. Specific: CBA ItemBuilder content is embedded in PCI-components using iframes. All resources used in CBA ItemBuilder Project Files are embedded in the generated Specific PCI-components (required to make them portable). However, since TAO might have a size limitation, depending on the configuration, the fastib2pci-converter also creates a Generic version that only includes the CBA ItemBuilder runtime in the PCI-component (resulting in very small file sizes of about 150 KB) and refers to static GitHub Pages for hosting the actual item content.

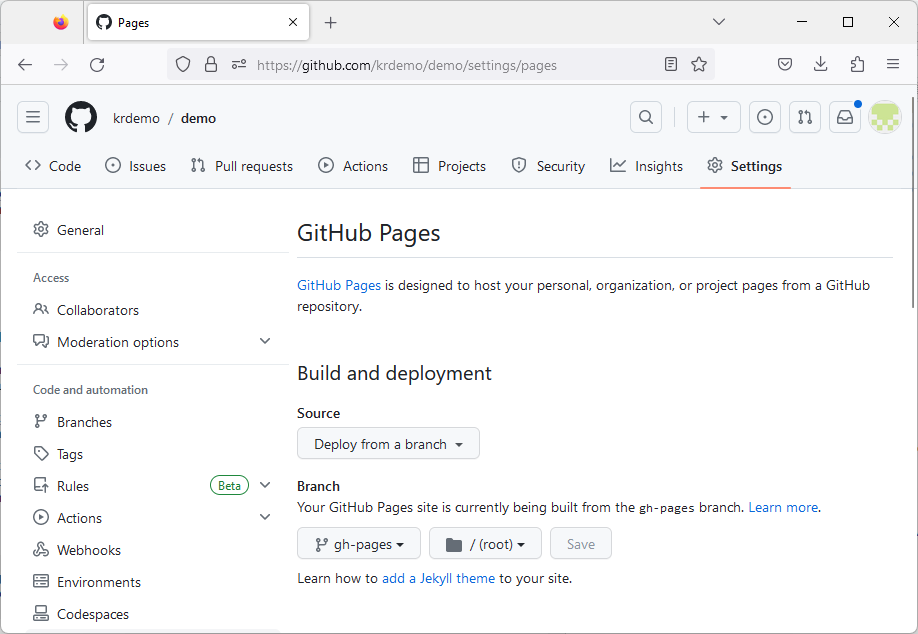

To use the Generic PCI-components generated by the fastib2pci-converter, GitHub Pages must be enabled.88

If GitHub Pages are activated in the project (see Figure 7.3), the CI/CD worker will automatically prepare the hosting for the item content as required for the Generic PCI-components.

FIGURE 7.3: Example repository settings showing activated GitHub Pages.

The hosted item content is then available at a URL of the following form: https://{your github account name}.github.io/{repository name}/{sub directory name}/

If, for item protection reasons, the content cannot be freely accessible, then using the Generic PCI components requires providing a (secured) static hosting of the content in the branch gh-pages, generated by the fastib2pci-converter. This effort is not required for the Specific PCI components.
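As a small convenience, the two URL patterns mentioned in this section (the Releases page and the GitHub Pages hosting) can be assembled programmatically. The following Python sketch only fills in the placeholders of these patterns; the function names and the example account, repository and directory names are hypothetical.

```python
def releases_url(account: str, repository: str) -> str:
    """URL of the Releases section where the generated PCI components are offered."""
    return f"https://github.com/{account}/{repository}/releases"


def pages_url(account: str, repository: str, sub_directory: str) -> str:
    """URL of the GitHub Pages hosting used by the Generic PCI-components."""
    return f"https://{account}.github.io/{repository}/{sub_directory}/"


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(releases_url("example-user", "my-assessment"))
    print(pages_url("example-user", "my-assessment", "component_1"))
```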

7.4.4 Side Note: Archive Assessment Content using GitHub Static Pages

For various reasons, it can sometimes be reasonable to provide assessment content statically (i.e. without data storage). In addition to screenshots, CBA ItemBuilder tasks, for example, can also be displayed as interactive web pages. With the converter fastib2pci this can be easily realized if you have your GitHub Pages activated in your account (either using public repositories or a GitHub Pro plan). See the public GitHub Pages for the template repository fastib2pci as an example: https://dipftba.github.io/fastib2pci/

7.5 Using CBA ItemBuilder Items with the IRTlib-Software

7.5.1 IRTlib-Editor vs. IRTlib-Player

The IRTlib Deployment Software consists of two parts: an IRTlib-Editor to configure studies and an IRTlib-Player to run studies. The IRTlib-Player requires a valid configuration of a deployment, which can be created and modified with the IRTlib-Editor.

The IRTlib-Player supports different deployment modes for assessments, either offline (e.g., from USB stick) or online (e.g., stand-alone with a URL pointing to a dedicated server prepared for hosting the software).

The IRTlib-Editor used to configure and define studies can currently be used only offline.89 The identical assessment content (i.e., CBA ItemBuilder Project Files containing one or multiple tasks) can be used for online and offline delivery.

7.5.2 Runtime Requirements (Offline and Online)

An installation of the IRTlib-Editor is not necessary for offline use, because the software (i.e., the IRTlib-Player and -Editor) is prepared to run without installation (currently only under Windows).

Readiness Tool (offline): A simple command-line readiness-tool is provided to check if a (Windows) computer fulfills the requirements to run the IRTlib-Player for offline use. The readiness tool is configured with command-line parameters and, if so configured, also forwards the command-line parameters to the player. This allows the readiness-tool to always be started before the player, or to be called after the player only in the event of an error.

The IRTlib-Player for online deployments is provided as a Docker image. The offline IRTlib-Editor can be used to prepare and configure online deployments, including the file management of CBA ItemBuilder project files and configuration files.

Requirements (online): Unlike the CBA ItemBuilder runtime itself (see section 7.7) the IRTlib-Player for online deployments has additional browser requirements for online data collection. Online collections are possible in browsers that support the execution of WebAssembly (WASM).90

7.5.3 Study Configuration

Global configurations within the IRTlib-Editor concern the available CBA ItemBuilder Runtimes. Hence, before using the IRTlib-Editor for CBA ItemBuilder deployments, it is necessary to add at least one Runtime. Moreover, for each study it needs to be configured whether either Login91 and Password or an access Token will be required.

Deliveries (i.e., studies) can be configured in the IRTlib-Editor. Studies need a unique Name and allow for an additional Label. Additional configurations for Studies include settings regarding the authentication of test-takers, the requested scaling of content, the configuration of a menu for test-administrators and the expected behavior when a test-taker finishes a session. Finally, the IRTlib-Editor allows defining the Routing between Test Parts.

Each Study needs at least one Test Part, and currently two types of Test Parts are supported: CBA ItemBuilder and SurveyJS.

7.5.4 Configuration of CBA ItemBuilder Test Parts

To prepare a Test Part of type CBA ItemBuilder, the CBA ItemBuilder project files need to be added to the configuration using the IRTlib-Editor. CBA ItemBuilder Project Files (with Tasks and Scope) can be added at different sections of a Test Part configuration.

After adding a CBA ItemBuilder Project File, the Tasks defined in that Project File are available in the IRTlib-Editor. The additional Scope allows using an identical Task multiple times. The section info allows adding items (i.e., CBA ItemBuilder Project Files with Tasks and Scope) to the following slots:

- Prefix: Items administered in the beginning of a Test Part (e.g., a cover page, an introduction or tutorial etc.)

- Epilog: Items administered at the end of a Test Part (e.g., a thank-you page etc.)

- Timeout: If the Test Part is time restricted, one or multiple items can be added that will be administered, if a Timeout occurs in the Test Part

The main items that should be administered between Prefix and Epilog can be added to the section Items. If the option Routing is not activated, these items are administered in the sequence defined in the view Items. If Routing is activated, the view Routing allows defining the sequence of items (i.e., CBA ItemBuilder Project Files with Tasks and Scope) using the Visual Designer.
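The slot structure of a Test Part without Routing can be pictured with the following Python sketch. It is an illustration under stated assumptions, not the data model of the IRTlib software: the class names `Item` and `PartConfig` are hypothetical, the Timeout slot is stored but not modelled in the sequence, and the uniqueness check on (Project File, Task, Scope) is an assumption derived from the role of the Scope described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Item:
    """An item as described in the text: Project File, Task and Scope."""
    project_file: str
    task: str
    scope: str


@dataclass
class PartConfig:
    """Hypothetical container for the slots of one Test Part."""
    prefix: List[Item] = field(default_factory=list)
    items: List[Item] = field(default_factory=list)
    epilog: List[Item] = field(default_factory=list)
    timeout: List[Item] = field(default_factory=list)  # not used in the linear sequence

    def linear_sequence(self) -> List[Item]:
        """Sequence without Routing: Prefix, then Items, then Epilog."""
        sequence = [*self.prefix, *self.items, *self.epilog]
        # Assumption: the Scope makes repeated use of the same Task distinguishable.
        if len(set(sequence)) != len(sequence):
            raise ValueError("identical Task used twice with the same Scope")
        return sequence


if __name__ == "__main__":
    part = PartConfig(
        prefix=[Item("intro.zip", "Start", "default")],
        items=[Item("unit.zip", "Task1", "first"), Item("unit.zip", "Task1", "second")],
        epilog=[Item("end.zip", "ThankYou", "default")],
    )
    for item in part.linear_sequence():
        print(item)
```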

7.5.5 Configuration of Item Pools

For the definition of sequences, results of concrete items (i.e., Classes with scoring conditions and Result Texts, see chapter 5) can be accessed directly. Adaptive tests, in which the current item (or the list of current items) is selected using IRT-methods (see section 2.7), additionally require a mapping of the scoring defined within the CBA ItemBuilder Project Files to the variable \(u\) (e.g., \(0\) for no credit, \(1\) for full credit and \(0.5\) for partial credit). For this mapping, the IRTlib-Editor provides the additional concept of an Item Pool.
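Conceptually, such a mapping assigns a numeric value \(u\) to each scoring outcome of an item. The following Python sketch shows the idea only; the hit names and the function `to_u` are hypothetical and do not reflect how the Item Pool is stored or configured in the IRTlib-Editor.

```python
from typing import Dict, Optional

# Hypothetical hit names of one Class; the values follow the example in the text.
U_BY_HIT: Dict[str, float] = {
    "NoCredit": 0.0,
    "PartialCredit": 0.5,
    "FullCredit": 1.0,
}


def to_u(hit_name: str, mapping: Dict[str, float]) -> Optional[float]:
    """Return u for a scoring outcome, or None if the outcome is not mapped."""
    return mapping.get(hit_name)


if __name__ == "__main__":
    for hit in ("FullCredit", "PartialCredit", "Unexpected"):
        print(hit, to_u(hit, U_BY_HIT))
```

Outcomes without a mapping (e.g., missing codes) yield `None` here, so that they are not silently treated as no credit.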

7.5.6 Configuration of Codebooks

Each item (i.e., a particular Task in a CBA ItemBuilder Project File administered in a particular Scope) provides a number of result variables (i.e., Classes, see section 1.5.1) as part of the ItemScore when the presentation of a particular task has ended, either because of a navigation-related command (see section 3.12.2) or because of a time limit (see section 7.2.8). The Codebook allows mapping the values provided by the CBA ItemBuilder scoring into variables with labels and value labels, as required for data sets.

Missing Value Coding: If a response was provided, either the name of the scoring condition (hit/miss) or the Result Text represents the value of the variable. Within the tasks, the defined scoring (see section 5.3.11) is expected to differentiate between Not Reached (NR) and Omitted Response (OR), if no response is provided. Hence, the values provided for classes (i.e., variables) of Tasks (i.e., for one or multiple items) are missing value coded based on the CBA ItemBuilder scoring. However, there are a few processing steps required by the IRTlib-Player to apply the missing value coding completely:

If a timeout or an abort by the test administrator / interviewer occurs, the Not Reached (NR) codes of the classes (i.e., variables) within the current task (i.e., the task in which the test part was aborted or the task that was ended by a timeout) are changed to either Missing due to Abort or Missing due to Timeout.

In the next step, the remaining tasks are determined that would have been administered if the timeout or the abort had not occurred. The classes (i.e., variables) of these tasks are then also missing-coded as either Missing due to Abort or Missing due to Timeout.

Finally, all remaining tasks are determined (i.e., the tasks that were neither administered before the timeout or the abort nor would have been administered if no timeout or abort had occurred). The classes (i.e., variables) for these tasks are missing-coded as Missing by Design.

Taking all three steps together, the resulting data set of the obtained item scores can be transformed into a regular rectangular data set, with one column for each class (i.e., variable), where the number of classes (i.e., variables and thereby columns) is known in advance as soon as all pairs of CBA ItemBuilder project files and task names are defined and the CBA ItemBuilder project files are available.
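The three processing steps can be sketched in Python as follows. This is a simplified illustration, not the implementation of the IRTlib-Player: the function name, the argument structure and the column naming `task.class` are assumptions, and the code `"NR"` stands for whatever value the task scoring uses for Not Reached.

```python
from typing import Dict, List, Optional

NR = "NR"  # assumed value used by the task scoring for Not Reached
MISSING_BY_DESIGN = "Missing by Design"
INTERRUPTION_CODES = {"abort": "Missing due to Abort", "timeout": "Missing due to Timeout"}


def code_missing_values(
    all_tasks: Dict[str, List[str]],          # task -> class names, known in advance
    administered: Dict[str, Dict[str, str]],  # task -> class -> value from the scoring
    remaining_planned: List[str],             # tasks that would still have followed
    current_task: Optional[str] = None,       # task in which the interruption occurred
    interruption: Optional[str] = None,       # None, "abort" or "timeout"
) -> Dict[str, str]:
    """Return one rectangular row with a value for every class of every task."""
    code = INTERRUPTION_CODES[interruption] if interruption else None
    row: Dict[str, str] = {}
    for task, classes in all_tasks.items():
        for cls in classes:
            if task in administered:
                value = administered[task][cls]
                # Step 1: recode NR in the interrupted task.
                if code and task == current_task and value == NR:
                    value = code
            elif code and task in remaining_planned:
                # Step 2: tasks that would have been administered.
                value = code
            else:
                # Step 3: tasks never planned for this session.
                value = MISSING_BY_DESIGN
            row[f"{task}.{cls}"] = value
    return row


if __name__ == "__main__":
    tasks = {"T1": ["A"], "T2": ["B", "C"], "T3": ["D"], "T4": ["E"]}
    given = {"T1": {"A": "Correct"}, "T2": {"B": "Wrong", "C": NR}}
    print(code_missing_values(tasks, given, ["T3"], "T2", "timeout"))
```

Because every class of every task receives a value, each session yields a row with the same columns, which is what makes the rectangular data set possible.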

For test designs with a unit structure (i.e., multiple items within a task), a differentiation between Not Reached (NR) and Omitted Responses (OR) needs to be included in the scoring definition of the CBA ItemBuilder Tasks. The IRTlib software then uses this differentiation to differentiate between normal responses, Missing by Design, Not Reached (NR) / Omitted Response (OR), Missing due to Abort, and Missing due to Timeout even in the case of complex task-flows or test assemblies that may depend on given responses, conditions and skip rules or even so-called pre-load variables (i.e., values assigned in advance to logins or tokens or values provided at the start of an assessment, see section 7.5.3).

7.5.7 Data Collected by the IRTlib-Software

Digitally-based assessments with CBA ItemBuilder Tasks create results data (based on the scoring definition, see chapter 5), raw log events (automatically generated log events), and additional user-defined log events (triggered either in the finite-state machine or in content embedded using ExternalPageFrames).

Raw Data Archives: The data stored by the IRTlib-Player always refer to the so-called Session. If a login or token is used as Person Identifier to authenticate test-takers, the raw data of a session are stored in a file {personidentifier.zip}. If there was no login in a particular session (yet), or if the study is configured to use a random identifier, the raw data archive will be named according to this (random) ID. The raw data archive is a zip archive that can contain multiple files. The files ItemScore.json and Trace.json contain the item scores and log data provided by the CBA ItemBuilder Tasks in JSON format. The file Log.json gathers additional log data provided by the IRTlib-Player, and the file Snapshot.json contains all information required to restore the state of a Task (used, for instance, for crash recovery).
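For post-processing, the JSON files of a raw data archive can be read without unpacking the archive. The Python sketch below only relies on the file names given above; the location of the files inside the archive, the UTF-8 encoding and the internal structure of each JSON document are assumptions, and the function name `read_raw_archive` is hypothetical.

```python
import io
import json
import zipfile
from typing import Any, Dict

EXPECTED_FILES = ("ItemScore.json", "Trace.json", "Log.json", "Snapshot.json")


def read_raw_archive(archive) -> Dict[str, Any]:
    """Parse the known JSON files of one session archive.

    `archive` is a path or a file-like object. Entries are keyed by their
    full path inside the archive, so files with equal names in different
    folders do not overwrite each other.
    """
    contents: Dict[str, Any] = {}
    with zipfile.ZipFile(archive) as zf:
        for name in zf.namelist():
            if name.rsplit("/", 1)[-1] in EXPECTED_FILES:
                # utf-8-sig also accepts files that start with a byte order mark.
                contents[name] = json.loads(zf.read(name).decode("utf-8-sig"))
    return contents


if __name__ == "__main__":
    # Build a tiny in-memory archive with made-up content for demonstration.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        zf.writestr("ItemScore.json", json.dumps({"example": 1}))
        zf.writestr("notes.txt", "ignored")
    buffer.seek(0)
    print(read_raw_archive(buffer))
```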

Monitoring Data: After the completion of an individual assessment, summarized status information can also be provided directly by the IRTlib-Player. Using this functionality, it is possible to react to key measures (e.g., particular time measures) already during data collection without the need to process the raw data archives containing the log or result data. The status information can either be written to a file (monitoring file) or passed in an online assessment as part of a forwarding URL. Computation of monitoring information is defined in the visual editor.

The monitoring data are created as variables in the visual editor and are stored as list of variable-value pairs. In the JSON file the variable-value pairs are stored in the following form:

    {
      "ExampleDateTime": "2021-08-02T10:25:58.6209884+02:00",
      "ExampleInteger": 42,
      "ExampleString": "Any String",
      "ExampleDecimal": 3.1415926
    }

Please note that the representation of the values in the JSON format differs slightly depending on the data type.
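When monitoring files are read for further processing, the different representations need to be taken into account. The following Python sketch reads the example shown above; it is a minimal illustration, assuming that date-time values are ISO 8601 strings as in the example. The fractional seconds in the example have seven digits, which the sketch truncates to the six digits (microseconds) that Python's `datetime` supports. The function names are hypothetical.

```python
import json
import re
from datetime import datetime
from typing import Any, Dict

# ISO 8601 date-time with optional fraction and optional UTC offset.
_ISO_DATETIME = re.compile(
    r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?(Z|[+-]\d{2}:\d{2})?$"
)


def parse_value(value: Any) -> Any:
    """Convert ISO date-time strings to datetime; return other values unchanged."""
    if isinstance(value, str):
        match = _ISO_DATETIME.match(value)
        if match:
            head, fraction, offset = match.groups()
            fraction = (fraction or "")[:6].ljust(6, "0")  # truncate/pad to microseconds
            offset = "+00:00" if offset == "Z" else (offset or "")
            return datetime.fromisoformat(f"{head}.{fraction}{offset}")
    return value


def parse_monitoring(text: str) -> Dict[str, Any]:
    """Parse a monitoring JSON document into a dict with typed values."""
    return {name: parse_value(value) for name, value in json.loads(text).items()}


if __name__ == "__main__":
    example = """{
      "ExampleDateTime": "2021-08-02T10:25:58.6209884+02:00",
      "ExampleInteger": 42,
      "ExampleString": "Any String",
      "ExampleDecimal": 3.1415926
    }"""
    for name, value in parse_monitoring(example).items():
        print(name, type(value).__name__, value)
```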

7.6 Using CBA ItemBuilder Items in SCORM Packages (with xAPI)

Assessment content embedded into Learning Management Systems (LMS) can become Open Educational Resources. Open standards, such as the Sharable Content Object Reference Model (SCORM), describe how content can be packaged into transferable ZIP archives, called Package Interchange Format, to be used in different LMS that support SCORM.

Flavors of SCORM-Packages created by the fastib2scorm-converter

- SCORM 1.2

- SCORM 2004

7.6.1 Prepare CBA ItemBuilder Project Files for fastib2scorm-Converter

To create SCORM packages with CBA ItemBuilder Project Files, the fastib2scorm converter can be used. It allows creating SCORM packages with either single or multiple Project Files. As a necessary prerequisite, one or multiple Tasks must be defined within each Project File. If multiple Project Files are used, they are ordered alphabetically. The same applies to the order of Tasks if multiple Tasks are defined within one CBA ItemBuilder Project File.

7.6.2 Generating SCORM Packages using fastib2scorm-Converter

The converter fastib2scorm is provided as a GitHub project template that contains a so-called CI/CD worker (i.e., GitHub Actions, see also 7.4.2). Using the converter requires an account at github.com. After creating an account and logging in to your profile, navigate to the repository fastib2scorm and push the button ‘Use this template’ (see also Figure 7.2 above for the similar approach used for the fastib2pci-converter).

7.6.3 General Data Provided to the LMS

SCORM packages that consist of a single CBA ItemBuilder task or a linear sequence of tasks automatically return the information summarized in Table 7.1 to the Learning Management System (LMS) without further configuration:

- Completion: If all tasks in a SCORM component are administered, the cmi.completion_status is reported as completed; otherwise, either incomplete is reported (if any user interaction with the SCORM content was recorded) or not attempted (if the SCORM component was loaded, but no interactions were recorded).

- Recent Task: If a SCORM component is created with multiple CBA ItemBuilder Tasks, the recent Task name is reported as cmi.core.lesson_location (1.1 / 1.2) or cmi.location (2004 2nd, 3rd, 4th). If a SCORM component is resumed, the component is continued with this Task.

- Progress: If multiple CBA ItemBuilder Tasks are combined as SCORM component, the progress (i.e., the number of already completed Tasks) is reported as cmi.progress_measure (2004 2nd, 3rd, 4th).

- Total Time and Session Time: The total time a SCORM component was used (accumulated across multiple visits) is reported as cmi.core.total_time (1.1 / 1.2) or cmi.total_time (2004 2nd, 3rd, 4th). The time of the last session is reported as cmi.session_time (2004 2nd, 3rd, 4th).

- Suspend Data: The snapshots of started CBA ItemBuilder Tasks are required to resume the Tasks. If possible (i.e., if feasible within the restrictions of the SCORM format definition), the (compressed) JSON-Snapshot is provided as cmi.suspend_data. Note that the maximum size varies across SCORM versions (1.1 / 1.2: 4096 characters; 2004 2nd edition: 4000 characters; 2004 3rd / 4th edition: 64000 characters).

| Data Model | Description | Versions |
|---|---|---|
| cmi.completion_status | Completion status, i.e., completed, incomplete, not attempted, unknown | (all) |
| cmi.core.lesson_location | Recent Task, i.e., the name of the last visited CBA ItemBuilder Project / Task used to resume | (1.1 / 1.2) |
| cmi.location | (see cmi.core.lesson_location) | (2004 2nd, 3rd, 4th) |
| cmi.progress_measure | Value between 0 (0%) and 1 (100%) indicating the progress within the component | (2004 2nd, 3rd, 4th) |
| cmi.core.total_time | Accumulated total time | (1.1 / 1.2) |
| cmi.total_time | (see cmi.core.total_time) | (2004 2nd, 3rd, 4th) |
| cmi.session_time | Time of the last session | (2004 2nd, 3rd, 4th) |
| cmi.suspend_data | JSON string to restore task states | (all, but varying size limits) |
| Not supported yet: cmi.core.exit | Exit status, i.e., time-out, suspend, logout | (1.1 / 1.2) |
| Not supported yet: cmi.exit | (see cmi.core.exit) | (2004 2nd, 3rd, 4th) |
| Not supported yet: cmi.core.entry | First attempt ab-initio or resume | (1.1 / 1.2) |
| Not supported yet: cmi.entry | (see cmi.core.entry) | (2004 2nd, 3rd, 4th) |
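Whether a snapshot can be stored as suspend data depends on its size after encoding. The following Python sketch checks an encoded snapshot against the character limits listed above. The compression scheme (zlib followed by Base64) is an assumption chosen for illustration and not necessarily the one used by the fastib2scorm-converter; all function names and the version keys are hypothetical.

```python
import base64
import json
import zlib
from typing import Any, Dict

# Character limits for cmi.suspend_data as stated in the text.
SUSPEND_DATA_LIMITS: Dict[str, int] = {
    "1.1": 4096,
    "1.2": 4096,
    "2004-2nd": 4000,
    "2004-3rd": 64000,
    "2004-4th": 64000,
}


def encode_snapshot(snapshot: Any) -> str:
    """Serialize, compress and Base64-encode a snapshot (assumed scheme)."""
    raw = json.dumps(snapshot, separators=(",", ":")).encode("utf-8")
    return base64.b64encode(zlib.compress(raw, 9)).decode("ascii")


def decode_snapshot(encoded: str) -> Any:
    """Inverse of encode_snapshot."""
    return json.loads(zlib.decompress(base64.b64decode(encoded)).decode("utf-8"))


def fits_suspend_data(encoded: str, scorm_version: str) -> bool:
    """True if the encoded snapshot respects the limit of the given version."""
    return len(encoded) <= SUSPEND_DATA_LIMITS[scorm_version]


if __name__ == "__main__":
    snapshot = {"task": "Task1", "state": ["page1"] * 50}  # made-up content
    encoded = encode_snapshot(snapshot)
    print(len(encoded), fits_suspend_data(encoded, "1.2"))
```

A delivery that cannot fit the snapshot into the limit has to fall back to another strategy (e.g., restarting the Task), which is why the limits matter when choosing the SCORM version.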

7.6.4 Report Scoring Results Provided by CBA ItemBuilder Tasks to the LMS

The CBA ItemBuilder scoring (see Chapter 5) consists of a list of Classes, each providing one active hit (or miss) at a time, and optionally a string or numeric result (called Result-Text). Additionally, scoring is provided in the form of variables with values.

- CBA ItemBuilder Scoring: Raw results as provided by the CBA ItemBuilder Tasks are converted to the cmi.interactions structure defined in the SCORM standard, using the fields id and learner_response. For each class and each variable, a cmi.interactions entry is created with a unique key (id) and a value (learner_response).

Classes:

- id: {Project-Name}.{TaskName}.{ClassName}

- learner_response: Hitname | ResultText

Variables:92

- id: {Project-Name}.{TaskName}.{VariableName}

- learner_response: Type | VariableValue
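How the entries could look for one class and one variable is sketched below in Python. The indexed element names (cmi.interactions.n.id and cmi.interactions.n.learner_response) follow the SCORM 2004 run-time data model. The sketch assumes that the `|` in the patterns above is a literal separator between the two parts, which the description leaves open; the function name and all example values are hypothetical.

```python
from typing import Dict


def interaction_entry(index: int, project: str, task: str, name: str,
                      first: str, second: str) -> Dict[str, str]:
    """Build the two data model elements of one cmi.interactions entry.

    `first` and `second` are Hitname and ResultText for classes, or Type and
    VariableValue for variables. Assumption: both parts are joined by " | ".
    """
    prefix = f"cmi.interactions.{index}"
    return {
        f"{prefix}.id": f"{project}.{task}.{name}",
        f"{prefix}.learner_response": f"{first} | {second}",
    }


if __name__ == "__main__":
    # Made-up project, task, class and variable names.
    print(interaction_entry(0, "DemoProject", "Task1", "ClassA", "Hit1", "42"))
    print(interaction_entry(1, "DemoProject", "Task1", "VarX", "Integer", "7"))
```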

7.6.5 Mapping of Scoring Result to Indicate Success

The transmission of results data from SCORM packages embedded in learning management systems in the form of cmi.interactions is sufficient to make all data available to the LMS for later use. However, it is not sufficient to report the results in a way that the LMS can interpret and feed back to teachers or course administrators. For that purpose, an additional mapping of the raw results to correct responses / incorrect responses is required, so that raw scores, success and credits can be derived.

TODO: We need to define a codebook structure for that purpose.

- Raw Score: cmi.core.score.raw, cmi.core.score.max, cmi.core.score.min

- Success: cmi.success_status, cmi.core.credit, cmi.core.lesson_status

| Data Model | Description | Versions |
|---|---|---|
| cmi.success_status | … | (all) |
| … | … | … |

7.6.6 Trace-Data using xAPI-Statements

Additional behavioral data gathered inside SCORM packages using the CBA ItemBuilder runtime can be stored using xAPI statements. The following statements are provided by default, storing the data provided by the CBA ItemBuilder runtime (see section 7.2.9):

- Traces (Log-Data): JSON messages informing about log events inside the CBA ItemBuilder Tasks are provided as single xAPI statements, with the data provided in the object part:

    {
      "actor": { "mbox": "mailto:user@example.com", "name": "User Name" },
      "verb": { "id": "https://example.com/verbs/logged", "display": { "en": "logged" } },
      "object": {
        "id": "http://example.com/system/events/123456",
        "definition": {
          "name": {
            "en": "CBA ItemBuilder Event Log"
          },
          "description": {
            "en": "Logged a CBA ItemBuilder event"
          },
          "data": "... JSON data provided by the runtime ..."
        }
      },
      "timestamp": "2023-07-25T10:30:00Z"
    }

- Scoring Results: JSON messages containing the scoring results of CBA ItemBuilder Tasks are provided as xAPI statements, with the data provided in the result part:

    {
      "actor": { "mbox": "mailto:user@example.com", "name": "User Name" },
      "verb": { "id": "https://example.com/verbs/experienced", "display": { "en": "experienced" } },
      "object": {
        "id": "http://example.com/project-file/task",
        "definition": {
          "name": {
            "en": "CBA ItemBuilder Project Name"
          },
          "description": {
            "en": "User Name experienced Project Name / TaskName."
          },
          "result": {
            "extensions": {
              "https://xapi.itembuilder.de/extensions/itemscore": {
                ... ItemScore JSON ...
              }
            }
          }
        }
      },
      "timestamp": "2023-07-25T10:30:00Z"
    }

- Snapshot: JSON messages containing the complete restore data of CBA ItemBuilder Tasks are provided as xAPI statements, with the data provided in the result part:

    {
      "actor": { "mbox": "mailto:user@example.com", "name": "User Name" },
      "verb": { "id": "https://example.com/verbs/experienced", "display": { "en": "experienced" } },
      "object": {
        "id": "http://example.com/project-file/task",
        "definition": {
          "name": {
            "en": "CBA ItemBuilder Project Name"
          },
          "description": {
            "en": "User Name experienced Project Name / TaskName."
          },
          "result": {
            "extensions": {
              "https://xapi.itembuilder.de/extensions/snapshot": {
                ... Snapshot JSON ...
              }
            }
          }
        }
      },
      "timestamp": "2023-07-25T10:30:00Z"
    }

7.7 Using CBA ItemBuilder Items in Custom Web Applications (Taskplayer API)

This section briefly describes how software developers can use CBA ItemBuilder content in web applications.

The CBA ItemBuilder is the tool for creating individual assessment components. These can be items, instructions, units or entire tests. Typically, several CBA ItemBuilder projects must be used for the application. Each CBA ItemBuilder project file provides one or more entry points called Tasks. For a test section you then need a list of ItemBuilder project files and the corresponding task names to administer them, for instance, in a linear sequence.

CBA ItemBuilder Project Files are zip archives that contain the following components (see also section 8.3.3):

A: The information required at design time for creating assessment components with the CBA ItemBuilder (i.e., for editing content). The files are only required for opening and modifying the assessment components with the CBA ItemBuilder and the files are not required at runtime (i.e., when using the assessment components to collect data).

B: Resource files (i.e., images, videos, and audio files) in web-supported formats that are imported using the CBA ItemBuilder to design pages. The file names of resource files are linked in the CBA ItemBuilder to components (i.e., the resource files are required for item editing and at runtime).

C: Embedded external resources (i.e., HTML, JavaScript, and CSS files, also in web-supported formats) integrated into pages with ExternalPageFrames / iframes are stored inside the zip archive. An HTML file is defined as entry for each ExternalPageFrame / iframe, but more files might be necessary.

D: A config.json that allows rendering the item content with the CBA ItemBuilder runtime is also stored in the zip archive. Only the config.json file and the two folders with the resources (resources) and the embedded resources (external-resources, which can contain sub-directories) are required for using the assessment components generated with the CBA ItemBuilder.

E: A file stimulus.json is also part of the CBA ItemBuilder project files. It contains JSON-serialized meta information about the tasks, such as the runtime version (runtimeCompatibilityVersion), the name (itemName) and the preferred size (itemWidth and itemHeight), as well as a list of all defined Tasks (tasks). This file also contains a list of required resource files (resources and externalResources) that allows pre-caching the item before rendering.
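Since Project Files are zip archives, the meta information in stimulus.json can be inspected with standard tools. The following Python sketch extracts the fields named above. The position of stimulus.json inside the archive and the data types of the individual fields are assumptions (the sketch simply searches for the file name and returns the values as found); the function names are hypothetical.

```python
import io
import json
import zipfile
from typing import Any, Dict

STIMULUS_KEYS = (
    "itemName", "runtimeCompatibilityVersion", "itemWidth", "itemHeight",
    "tasks", "resources", "externalResources",
)


def read_stimulus(project_file) -> Dict[str, Any]:
    """Return the parsed stimulus.json of a Project File (path or file-like object)."""
    with zipfile.ZipFile(project_file) as zf:
        for name in zf.namelist():
            if name.rsplit("/", 1)[-1] == "stimulus.json":
                return json.loads(zf.read(name).decode("utf-8-sig"))
    raise FileNotFoundError("stimulus.json not found in project file")


def summarize(stimulus: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only the fields mentioned in the text; missing fields become None."""
    return {key: stimulus.get(key) for key in STIMULUS_KEYS}


if __name__ == "__main__":
    # Build a minimal stand-in for a Project File with made-up values.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        zf.writestr("stimulus.json", json.dumps({"itemName": "demo", "tasks": ["Task1"]}))
    buffer.seek(0)
    print(summarize(read_stimulus(buffer)))
```

A delivery could use such a summary to check that the matching runtime version is available and to pre-cache the listed resources before a Task is shown.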

Taskplayer API: The required Runtime to embed CBA ItemBuilder items into browser-based assessments is provided as a JavaScript file (main.js) and a CSS file (main.css) for each version of the CBA ItemBuilder. Since version 9.0, the interface of the Taskplayer API provided by the JavaScript runtime has remained stable, while the internal implementation is changed and updated when new features are implemented in the CBA ItemBuilder. To render a CBA ItemBuilder project of a particular version using the config.json file together with the two folders (resources and external-resources), the same version of the CBA ItemBuilder runtime (i.e., main.js and main.css) is required.

For individual linear sequences, the runtime provides navigation between the tasks directly. If skip rules or adaptive tests are to be implemented, then several runtimes can be combined for the administration of individual tasks or packages of several tasks. This approach also allows implementing a delivery platform that can handle ItemBuilder tasks of different versions.

For programming a CBA ItemBuilder delivery, the following points must be considered and implemented:

Provision of Static Files: To use CBA ItemBuilder items, the resources (directories resources and external-resources) must be made available (e.g. via static hosting). This can be done via arbitrary URLs, which are communicated via the configuration of the runtime.

Configuration: The runtime of the TaskPlayer API can be configured via URL parameters or with a structure cba_runtime_config declared in the global JavaScript scope (i.e., as window.cba_runtime_config).

Caching of Snapshots: Browsers can be closed, and assessments should be able to be continued afterward as unchanged as possible. Tasks can also be exited and revisited as part of between-task navigation. For these requirements, the runtime provides the state of a task as a so-called snapshot, which the delivery software is expected to store and to provide for restoring the state of tasks. Therefore, for implementing a custom delivery, it is required to enable persistence of the snapshot data because these snapshots have to be made available to the TaskPlayer API for resuming and restoring tasks.

Storing of Provided Data: For a data collection with CBA ItemBuilder items using the TaskPlayer API, the following two types of data must be stored: At definable intervals, the TaskPlayer API transmits the collected log data (referred to as trace logs). These data have become the focus of scientific interest for the in-depth investigation of computer-based assessments and should always be stored. The direct results (i.e., the so-called Item Scores) are provided by the TaskPlayer API when the Tasks are switched and must also be stored. Snapshots, trace data, and item scores are each assigned to a person-identifier and a task so that they can be easily post-processed afterward.
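The storage requirements listed above can be summarized in a small server-side sketch. The Python class below is a hypothetical in-memory stand-in for the persistence layer of a custom delivery; it is not part of the TaskPlayer API, and a real delivery would write to a database or to files. It only illustrates that snapshots are overwritten (the latest state is needed for restoring), whereas trace logs and item scores are appended, each keyed by person identifier and task.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional, Tuple

Key = Tuple[str, str]  # (person identifier, task)


class InMemoryStore:
    """Hypothetical storage for data provided by the runtime."""

    def __init__(self) -> None:
        self.snapshots: Dict[Key, Any] = {}
        self.traces: Dict[Key, List[Any]] = defaultdict(list)
        self.item_scores: Dict[Key, List[Any]] = defaultdict(list)

    def save_snapshot(self, person: str, task: str, snapshot: Any) -> None:
        # Only the most recent snapshot is needed to restore a task.
        self.snapshots[(person, task)] = snapshot

    def load_snapshot(self, person: str, task: str) -> Optional[Any]:
        # None means the task has not been visited yet and starts fresh.
        return self.snapshots.get((person, task))

    def append_trace(self, person: str, task: str, batch: Any) -> None:
        # Trace logs arrive in batches at intervals; keep all of them.
        self.traces[(person, task)].append(batch)

    def append_item_score(self, person: str, task: str, score: Any) -> None:
        # Item scores arrive when tasks are switched; revisits add new entries.
        self.item_scores[(person, task)].append(score)


if __name__ == "__main__":
    store = InMemoryStore()
    store.save_snapshot("person-1", "Task1", {"page": 2})
    store.append_trace("person-1", "Task1", [{"event": "click"}])
    store.append_item_score("person-1", "Task1", {"ClassA": "Hit1"})
    print(store.load_snapshot("person-1", "Task1"))
```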

A description of an example implementation of an Execution Environment using the TaskPlayer API is provided (see EE4Basic in section B.5) together with technical documentation for developers (see Reference in section B.5).